Day 4: Data Preprocessing

📑 Table of Contents

- 🌟 Welcome to Day 4

- 🧹 What is Data Preprocessing?

- 🔑 Key Steps in Data Preprocessing

  - Handling Missing Values

  - Encoding Categorical Features

  - Feature Scaling and Normalization

  - Dealing with Outliers

  - Feature Selection and Engineering

  - Train-Test Splitting

- 🏗️ Practical Techniques and Code Examples

  - Imputation

  - One-Hot Encoding

  - Standardization and Min-Max Scaling

  - Detecting and Handling Outliers

  - Feature Selection with Variance Threshold

  - Train-Test Split

- 🔍 Exploratory Data Analysis (EDA) Integration

- 💻 Practical Examples and Use Cases

- 📚 Resources

- 💡 Tips and Tricks

1. 🌟 Welcome to Day 4

Welcome to Day 4 of your 90-day machine learning journey! Today, we delve deep into Data Preprocessing, one of the most critical phases in building a successful Machine Learning (ML) pipeline. High-quality data preprocessing can mean the difference between a mediocre model and a high-performing one. From handling missing values to scaling features, you’ll learn techniques that ensure your models see the data in the best possible light.

Preprocessing is not just a step—it’s an art. By the end of today, you’ll understand how to systematically transform raw, messy datasets into clean, structured ones ready for modeling!

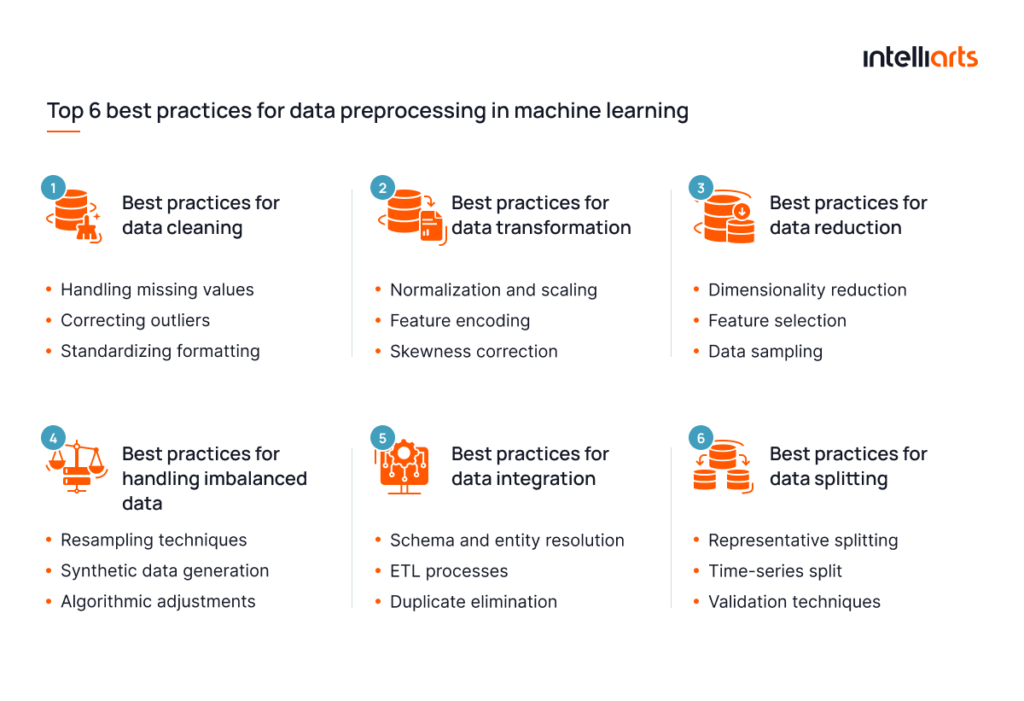

2. 🧹 What is Data Preprocessing?

Data Preprocessing involves transforming raw data into a more understandable and usable format. Real-world data is often messy—missing values, inconsistent formats, categorical strings, and outliers are common headaches. Preprocessing tackles these issues head-on, leading to more stable and accurate models.

Key Benefits:

- Improved Model Accuracy: Cleaner input leads to better predictions.

- Reduced Noise and Bias: Handling outliers and inconsistent records keeps them from skewing the model.

- Enhanced Model Generalization: Proper scaling, encoding, and selection of features help models generalize well to unseen data.

Related Image (Data Cleaning Illustration):

(Image Source: Data Cleaning: What It Is, Procedure, Best Practices | Airbyte)

3. 🔑 Key Steps in Data Preprocessing

Each preprocessing step addresses a specific challenge, ensuring you deliver well-structured data to your model.

📝 Handling Missing Values

Real datasets often have incomplete information. Consider a housing dataset where some entries lack the number of bedrooms. Dropping these rows discards the information in their other columns, while leaving the gaps in place causes errors in the many estimators that cannot handle missing values.

Techniques:

- Mean/Median/Mode Imputation: Replace missing values with a central tendency measure.

- KNN Imputation: Estimate missing values based on similar data points.

- Advanced Methods: Iterative imputation or model-based approaches.

Related Image (Missing Data):

(Image Source: wikimedia)

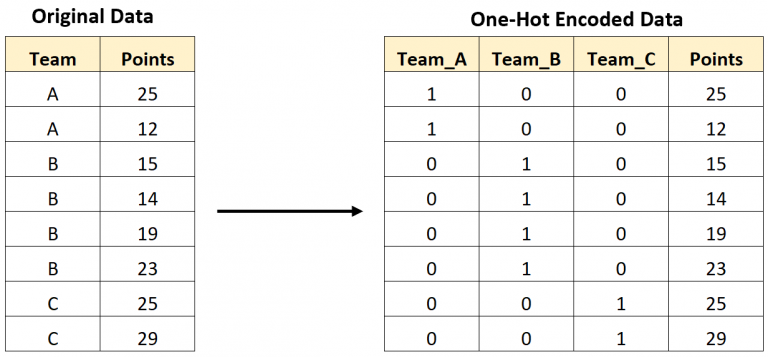
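To make KNN imputation concrete, here is a minimal sketch using scikit-learn's KNNImputer. The toy array and the choice of n_neighbors=2 are assumptions for illustration, not recommendations.

```python
import numpy as np
from sklearn.impute import KNNImputer

# Toy data (hypothetical): rows are samples, np.nan marks a missing entry.
X_missing = np.array([[1.0, 2.0, np.nan],
                      [3.0, 4.0, 3.0],
                      [np.nan, 6.0, 5.0],
                      [8.0, 8.0, 7.0]])

# Each missing entry is replaced by the mean of that feature over the
# n_neighbors closest rows, with distance measured on the observed features.
knn_imputer = KNNImputer(n_neighbors=2)
X_knn = knn_imputer.fit_transform(X_missing)

print(X_knn)
```

Unlike mean imputation, the filled value depends on which rows are most similar, so two missing entries in the same column can receive different values.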

📝 Encoding Categorical Features

Models generally work with numerical values. Categorical features (e.g., “Red”, “Blue”, “Green”) must be encoded numerically.

Techniques:

- One-Hot Encoding: Creates binary columns for each category.

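- Label Encoding: Assigns each category an integer value. The integers imply an order, which linear models may misread, so this tends to suit target labels or tree-based models.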

- Ordinal Encoding: For categories with an inherent order (e.g., “Small”, “Medium”, “Large”).

Related Image (One-Hot Encoding):

(Image Source: MachineLearningTheory)

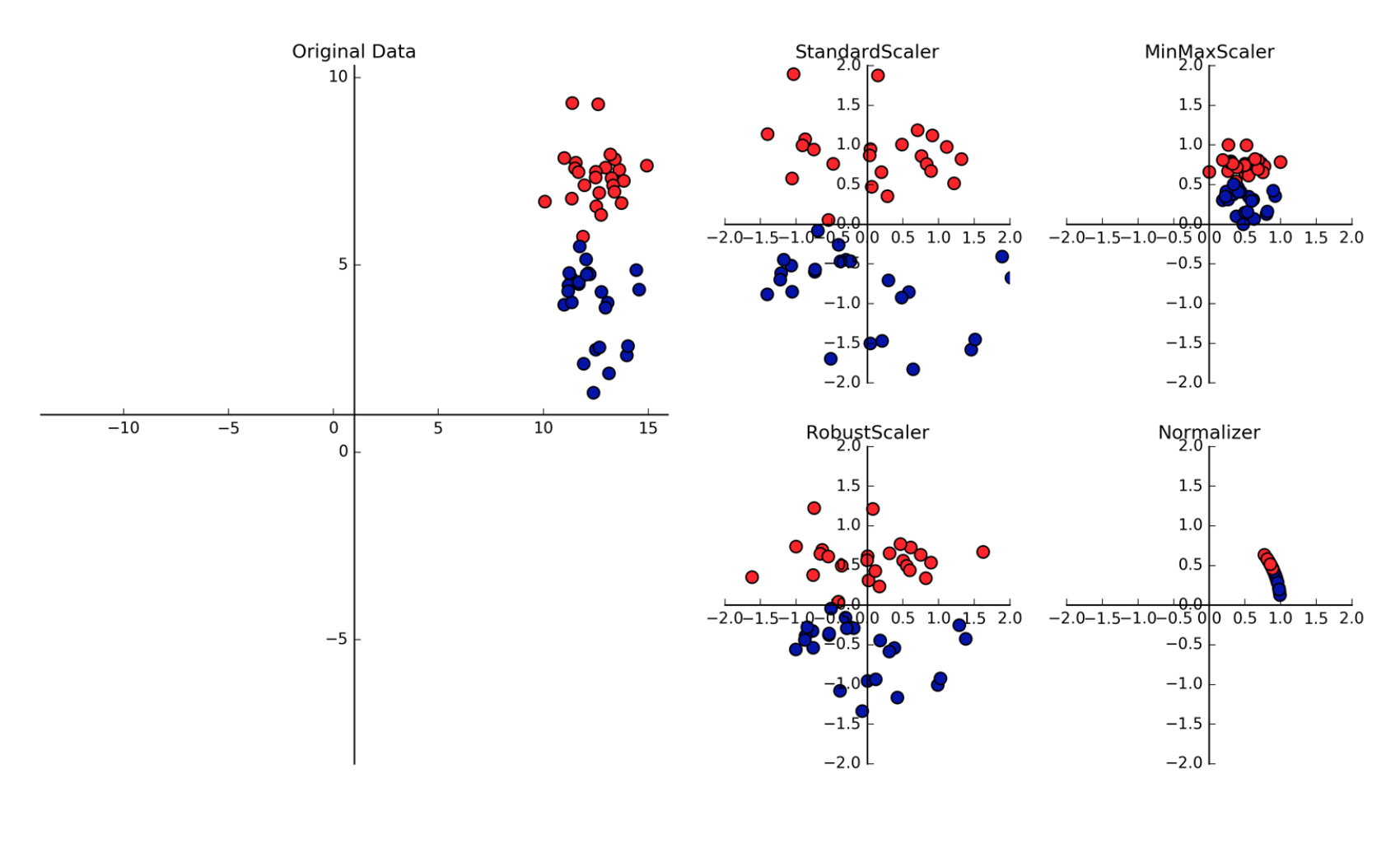
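For ordered categories, a short sketch with scikit-learn's OrdinalEncoder shows why the order should be passed explicitly. The size labels below are hypothetical sample data.

```python
import numpy as np
from sklearn.preprocessing import OrdinalEncoder

# Hypothetical ordered category: Small < Medium < Large.
X_size = np.array([['Small'], ['Large'], ['Medium'], ['Small']])

# Without the categories argument the encoder sorts labels alphabetically
# (Large, Medium, Small), which would not match the intended order.
ordinal_encoder = OrdinalEncoder(categories=[['Small', 'Medium', 'Large']])
X_ord = ordinal_encoder.fit_transform(X_size)

print(X_ord.ravel())  # [0. 2. 1. 0.]
```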

📝 Feature Scaling and Normalization

If one feature ranges from 0 to 1 and another from 0 to 10,000, the latter might dominate the model’s learning process. Scaling levels the playing field.

Techniques:

- Standardization (Z-score): Transforms features to have zero mean and unit variance.

- Min-Max Scaling: Rescales features to a [0, 1] range.

- Robust Scaling: Centers on the median and scales by the interquartile range, which makes it less sensitive to outliers.

Related Image (Feature Scaling Concept):

(Image Source: Python Data Science)
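The difference between robust scaling and standardization is easiest to see on a feature with one extreme value. The sketch below uses a made-up five-value column; RobustScaler and StandardScaler are used with their default settings.

```python
import numpy as np
from sklearn.preprocessing import RobustScaler, StandardScaler

# Hypothetical feature with a single extreme value at the end.
X_out = np.array([[10.0], [20.0], [30.0], [40.0], [1000.0]])

# RobustScaler subtracts the median (30) and divides by the IQR (40 - 20 = 20).
X_robust = RobustScaler().fit_transform(X_out)

# StandardScaler subtracts the mean and divides by the standard deviation,
# both of which are pulled upward by the extreme value.
X_standard = StandardScaler().fit_transform(X_out)

print("Robust:\n", X_robust.ravel())      # first four values: -1, -0.5, 0, 0.5
print("Standardized:\n", X_standard.ravel())
```

With standardization the four ordinary values end up squeezed close together, because the outlier inflates the standard deviation. Robust scaling keeps them spread out and leaves the outlier visibly far away.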

📝 Dealing with Outliers

Outliers can distort the data’s representation. Consider a salary dataset where most salaries range between $50k and $100k, but one entry is $1 million—this outlier could mislead the model.

Techniques:

- Removing Outliers: Drop outlier rows if they’re errors.

- Winsorizing: Cap extreme values at a specified percentile.

- Use Robust Estimators: Methods less influenced by outliers (e.g., median-based measures).

Related Image (Box Plot Outliers):

(Image Source: Analyticsvidhya)
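Winsorizing can be sketched with plain NumPy by clipping at chosen percentiles. The salary figures and the 5th/95th percentile cut-offs below are assumptions picked for illustration; SciPy also offers scipy.stats.mstats.winsorize as an alternative.

```python
import numpy as np

# Hypothetical salaries: most are between $50k and $100k, one is $1 million.
salaries = np.array([52_000, 61_000, 58_000, 75_000, 98_000, 1_000_000], dtype=float)

# Compute the caps (5th and 95th percentiles are an assumed choice).
low, high = np.percentile(salaries, [5, 95])

# Values below `low` are raised to it and values above `high` are lowered to it.
salaries_capped = np.clip(salaries, low, high)

print("Caps:", low, high)
print(salaries_capped)
```

Every row is kept, which is the main difference from dropping outliers. In a real project the caps would normally be computed on the training data only and then applied to new data.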

📝 Feature Selection and Engineering

Not all features are helpful. Redundant or irrelevant features can add noise and slow down training.

Techniques:

- Variance Threshold: Remove features with little variation.

- SelectKBest: Pick top features based on statistical tests.

- PCA: Combine correlated features into fewer dimensions.

- Manual Feature Engineering: Domain knowledge can guide the creation of new, more informative features.

Related Image (Feature Selection Concept):

(Image Source: Wallstreetmojo)

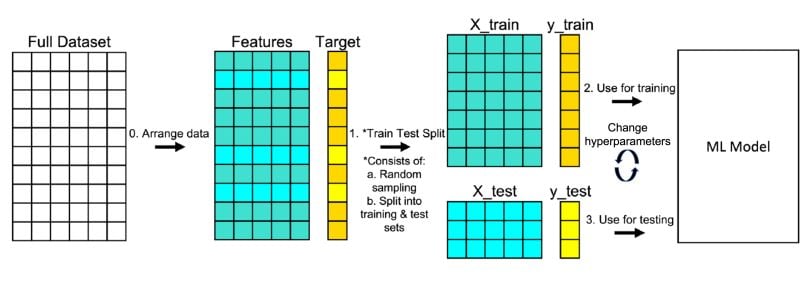
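As a rough sketch of SelectKBest and PCA side by side, the example below uses the Iris dataset bundled with scikit-learn. The dataset, the f_classif scoring function, and keeping two features or components are all assumptions chosen to keep the example small.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

# Iris: 150 samples, 4 numeric features, 3 classes.
X_iris, y_iris = load_iris(return_X_y=True)

# SelectKBest keeps the k original features with the highest ANOVA F-score
# against the target, so it needs y.
selector = SelectKBest(score_func=f_classif, k=2)
X_best = selector.fit_transform(X_iris, y_iris)
print("Kept feature mask:", selector.get_support())

# PCA ignores y and builds new features as linear combinations of all inputs.
pca = PCA(n_components=2)
X_pca = pca.fit_transform(X_iris)
print("Explained variance ratio:", pca.explained_variance_ratio_)
```

The selected columns remain interpretable original features, while the PCA components are mixtures that are harder to name but can capture shared variation across correlated features.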

📝 Train-Test Splitting

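To validate how well your model generalizes, split the data into training and test sets before fitting any preprocessing step or model. Statistics such as means and scaling factors should be learned from the training set only, so that no information from the test set leaks into training and the evaluation stays honest.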

Technique:

- The train_test_split function in scikit-learn.


4. 🏗️ Practical Techniques and Code Examples

Let’s explore some common preprocessing steps in Python with scikit-learn:

📝 Imputation

Replace missing values with the mean:

import numpy as np

from sklearn.impute import SimpleImputer

X = np.array([[1, 2, np.nan],

[3, np.nan, 6],

[7, 8, 9]])

imputer = SimpleImputer(strategy='mean')

X_imputed = imputer.fit_transform(X)

print(X_imputed)

📝 One-Hot Encoding

Convert categorical values into binary vectors:

from sklearn.preprocessing import OneHotEncoder

X_cat = np.array([['Red'], ['Blue'], ['Red'], ['Green']])

encoder = OneHotEncoder(sparse_output=False)  # scikit-learn >= 1.2; older releases use sparse=False

X_encoded = encoder.fit_transform(X_cat)

print(X_encoded)

📝 Standardization and Min-Max Scaling

Bring features to comparable scales:

from sklearn.preprocessing import StandardScaler, MinMaxScaler

X_num = np.array([[10],[20],[30],[40],[50]], dtype=float)

scaler_std = StandardScaler()

X_std = scaler_std.fit_transform(X_num)

scaler_mm = MinMaxScaler()

X_mm = scaler_mm.fit_transform(X_num)

print("Standardized:\n", X_std)

print("Min-Max Scaled:\n", X_mm)

📝 Detecting and Handling Outliers

Identify and remove outliers using the IQR method:

import pandas as pd

X_df = pd.DataFrame({'Feature':[1,2,2,100,3,2]})

q1 = X_df['Feature'].quantile(0.25)

q3 = X_df['Feature'].quantile(0.75)

iqr = q3 - q1

lower_bound = q1 - 1.5*iqr

upper_bound = q3 + 1.5*iqr

X_no_outliers = X_df[(X_df['Feature'] >= lower_bound) & (X_df['Feature'] <= upper_bound)]

print(X_no_outliers)

📝 Feature Selection with Variance Threshold

Remove features whose variance does not exceed a threshold (here 0.0, so only constant features are dropped):

from sklearn.feature_selection import VarianceThreshold

X = np.array([[0,1,2],

[0,1,2],

[0,1,3]])

selector = VarianceThreshold(threshold=0.0)

X_selected = selector.fit_transform(X)

print(X_selected)

📝 Train-Test Split

Ensure unbiased evaluation of model performance:

from sklearn.model_selection import train_test_split

X = np.arange(20).reshape(10,2)

y = np.arange(10)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

print("Train size:", X_train.shape, "Test size:", X_test.shape)
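Once the data is split, preprocessing statistics are usually learned from the training portion only. The sketch below reuses the same toy arrays and applies a StandardScaler that way; the variable names are illustrative.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

X = np.arange(20, dtype=float).reshape(10, 2)
y = np.arange(10)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

scaler = StandardScaler()
# fit_transform learns the mean and standard deviation from the training rows.
X_train_scaled = scaler.fit_transform(X_train)
# transform reuses those training statistics; the test rows are never "seen" by fit.
X_test_scaled = scaler.transform(X_test)

print("Train mean after scaling:", X_train_scaled.mean(axis=0))
print("Test mean after scaling:", X_test_scaled.mean(axis=0))
```

The scaled test set will generally not have exactly zero mean, and that is expected: it is being measured against the training distribution, just as unseen data would be.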

5. 🔍 Exploratory Data Analysis (EDA) Integration

Before Preprocessing:

Use EDA to understand your data’s underlying structure. Identify which features have missing values, distributions that need scaling, or suspicious outliers. EDA guides your preprocessing decisions, ensuring that you don’t blindly transform data without context.

Visual tools like histograms, box plots, and scatter matrices can highlight:

- Feature distributions

- Presence of missing values

- Potential outliers

- Correlations between features

Related Image (EDA Visualization):

(Image Source: Wikimedia)
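A few pandas calls cover most of these checks before any plotting. The small housing-style DataFrame below is made up for illustration, and the column names are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical housing data with gaps and one unusually large property.
df = pd.DataFrame({
    'area': [50.0, 62.0, np.nan, 80.0, 400.0],
    'bedrooms': [1.0, 2.0, 2.0, np.nan, 6.0],
    'style': ['flat', 'flat', 'house', 'house', 'mansion'],
})

# Presence of missing values: count of NaN per column.
missing_counts = df.isna().sum()
print(missing_counts)

# Feature distributions: count, mean, std, min, quartiles, max for numeric columns.
summary = df.describe()
print(summary)

# Correlations between numeric features (non-numeric columns are excluded first).
correlations = df.select_dtypes(include='number').corr()
print(correlations)
```

A large gap between the 75th percentile and the maximum in the describe() output is a quick hint that a column may contain outliers worth inspecting with a box plot.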

6. 💻 Practical Examples and Use Cases

- House Price Prediction: Impute missing data (lot size), encode categorical features (location, style), scale numerical features (area, price per sq ft), and remove extreme outliers (unusually large mansions). Result: A cleaner dataset that leads to better regression accuracy.

- Customer Churn Analysis: Encode categorical variables (customer region), handle missing demographic info (impute median age), and select top features influencing churn (tenure, contract type). Proper preprocessing increases the model’s ability to distinguish churners from loyal customers.

- Medical Diagnosis: Remove outliers from lab measurements, standardize test results (blood pressure, cholesterol levels), and select the most informative biomarkers. This helps your classification model diagnose conditions more accurately.


7. 📚 Resources

- Documentation & Guides:

- Scikit-Learn Preprocessing Documentation: Official reference for preprocessing tools.

- Pandas Documentation: For data manipulation before and during preprocessing.

- Learning Platforms:

- Kaggle Datasets and Kernels: Explore community examples of data preprocessing.

- Data Cleaning with Python (Kaggle): A free micro-course.

- In-Depth Reading:

- Feature Engineering & Selection Book: Deep dive into advanced techniques.

- Online Courses:

- Coursera, Udemy, and edX offer comprehensive courses on Data Preprocessing and Data Wrangling.

8. 💡 Tips and Tricks

- Iterative Approach: Preprocessing is not a one-shot deal. Experiment, validate, and iterate.

- Pipelines: Wrap preprocessing steps in a Pipeline to ensure reproducibility and simplify your workflow.

- Domain Knowledge: Understand the context, since some outliers may hold meaningful insights.

- Don’t Over-Engineer: While feature engineering can help, adding too many engineered features might overfit. Strike a balance.

- Validate Early and Often: After preprocessing, try simple models (like linear regression or decision trees) to see improvements before moving to complex models.

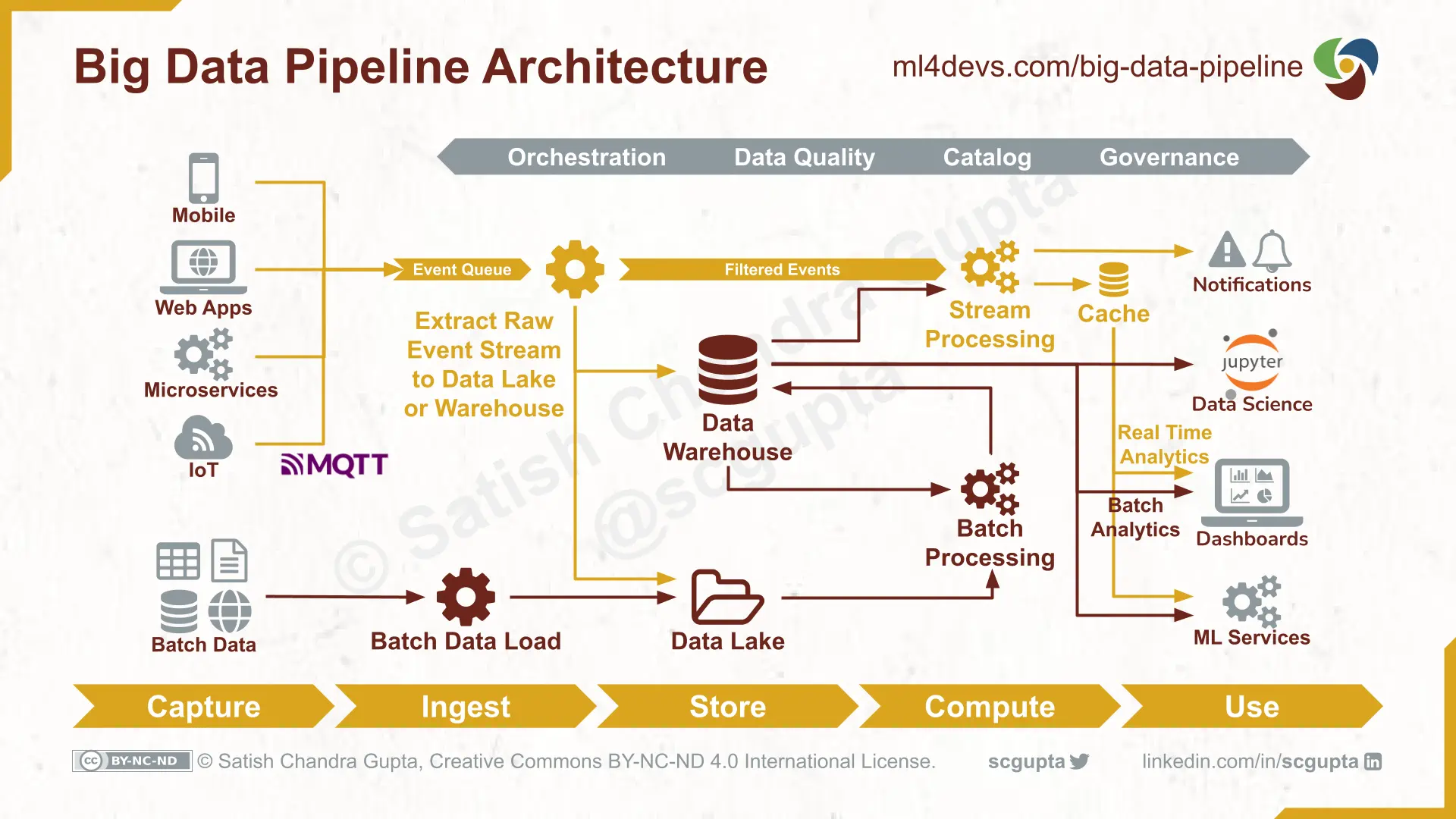

Related Image (Data Pipeline Concept):

(Image Source: ml4devs)
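To illustrate the Pipelines tip, here is a minimal sketch that chains imputation, scaling, encoding, and a model with scikit-learn's Pipeline and ColumnTransformer. The churn-style data, the column names, and the choice of LogisticRegression are hypothetical and only there to make the example runnable.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical churn data: one numeric and one categorical column.
df_churn = pd.DataFrame({
    'tenure': [1.0, 24.0, np.nan, 36.0, 5.0, 48.0],
    'contract': ['monthly', 'yearly', 'monthly', 'yearly', 'monthly', 'yearly'],
})
y_churn = np.array([1, 0, 1, 0, 1, 0])

# Numeric columns: fill gaps with the median, then standardize.
numeric_steps = Pipeline([
    ('impute', SimpleImputer(strategy='median')),
    ('scale', StandardScaler()),
])

# Route each column group to its own preprocessing.
preprocess = ColumnTransformer([
    ('num', numeric_steps, ['tenure']),
    ('cat', OneHotEncoder(handle_unknown='ignore'), ['contract']),
])

# Preprocessing and model are fitted together as one object.
model = Pipeline([
    ('preprocess', preprocess),
    ('classify', LogisticRegression()),
])
model.fit(df_churn, y_churn)
predictions = model.predict(df_churn)
print(predictions)
```

Because the whole chain is a single estimator, calling fit on training data and predict on new data applies the same learned imputation values, scaling factors, and category mapping each time, which is what makes the workflow reproducible.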

Conclusion: Mastering data preprocessing sets the stage for building powerful, accurate, and reliable machine learning models. By carefully cleaning, encoding, scaling, and selecting features, you provide your models with the best possible data to learn from. This step is often where the biggest gains in model performance are realized—so take your time, experiment with different strategies, and refine your preprocessing pipeline as you proceed on your journey!

Up next, we’ll explore more advanced topics and techniques to help you become a data science and machine learning expert! 🚀