
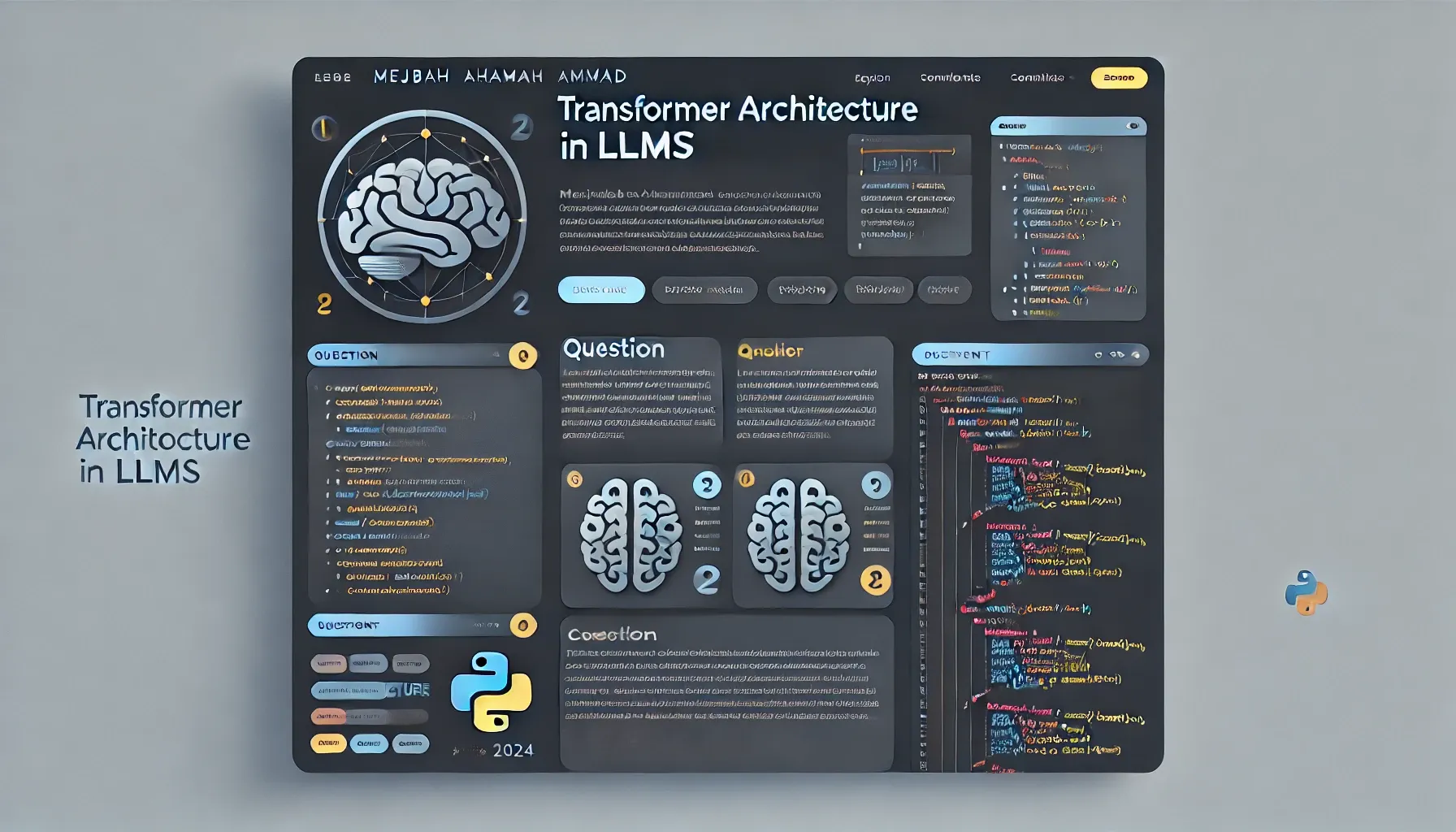

Transformer Architecture in LLMs

Question: What is the role of the Transformer architecture in Large Language Models (LLMs)?

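Answer: The Transformer architecture is the backbone of modern Large Language Models (LLMs). It processes token sequences with attention instead of recurrence, so every position can be computed in parallel during training. This is what makes it practical to train LLMs that understand and generate human-like text. The key components of the Transformer architecture include:

- Self-Attention Mechanism: Lets each token weigh every other token in the input sequence, so its representation reflects the relevant context.

- Positional Encoding: Injects information about word order, which attention alone does not capture because it treats the input as an unordered set.

- Multi-Head Attention: Runs several attention operations in parallel, each with its own learned projections, so the model can attend to different kinds of relationships at once.

- Feedforward Layers: Apply a position-wise nonlinear transformation to each token's representation, adding expressive capacity beyond the attention step.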
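The four components above can be sketched as one framework-free layer. This is a minimal pure-Python illustration under stated assumptions: sinusoidal positional encodings (as in the original Transformer paper), random weights, and toy sizes. The helper names (`self_attention`, `multi_head_attention`, `feed_forward`, etc.) are hypothetical. Residual connections and layer normalization, which real Transformer blocks also use, are left out for brevity.

```python
import math
import random

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    # (n x k) @ (k x m) -> (n x m) using plain Python lists
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def positional_encoding(seq_len, d_model):
    # Sinusoidal encoding: sin on even dimensions, cos on odd dimensions
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def self_attention(x, wq, wk, wv):
    # Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(q[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights

def multi_head_attention(x, heads, wo):
    # Run each head independently, concatenate per token, then project with wo
    outputs = [self_attention(x, wq, wk, wv)[0] for wq, wk, wv in heads]
    concat = [sum((out[i] for out in outputs), []) for i in range(len(x))]
    return matmul(concat, wo)

def feed_forward(x, w1, w2):
    # Position-wise FFN: ReLU(x W1) W2, applied to each token independently
    hidden = [[max(0.0, h) for h in row] for row in matmul(x, w1)]
    return matmul(hidden, w2)

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
seq_len, d_model, n_heads = 4, 8, 2
d_head, d_ff = d_model // n_heads, 16

# Hypothetical token embeddings, with positional encodings added element-wise
tokens = rand_matrix(seq_len, d_model, rng)
pe = positional_encoding(seq_len, d_model)
x = [[t + p for t, p in zip(tr, pr)] for tr, pr in zip(tokens, pe)]

# One (W_q, W_k, W_v) triple per head, plus an output projection
heads = [tuple(rand_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(n_heads)]
attn_out = multi_head_attention(x, heads, rand_matrix(d_model, d_model, rng))
ffn_out = feed_forward(attn_out, rand_matrix(d_model, d_ff, rng), rand_matrix(d_ff, d_model, rng))
print(len(ffn_out), len(ffn_out[0]))  # 4 8
```

Each attention row is a probability distribution over the sequence, and the layer maps a `seq_len x d_model` input to an output of the same shape, which is what allows such layers to be stacked.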

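```python
from transformers import AutoModel, AutoTokenizer

# Load a pre-trained LLM (GPT-2; GPT-3's weights are not publicly released)
tokenizer = AutoTokenizer.from_pretrained("gpt2")
model = AutoModel.from_pretrained("gpt2", attn_implementation="eager")  # eager attention can return weights

# Run a forward pass and ask for the self-attention weights of every layer
inputs = tokenizer("Transformers power modern LLMs", return_tensors="pt")
outputs = model(**inputs, output_attentions=True)
attention_heads = outputs.attentions  # tuple: one (batch, heads, seq, seq) tensor per layer
```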