1.5 Machine Learning Workflow

Introduction

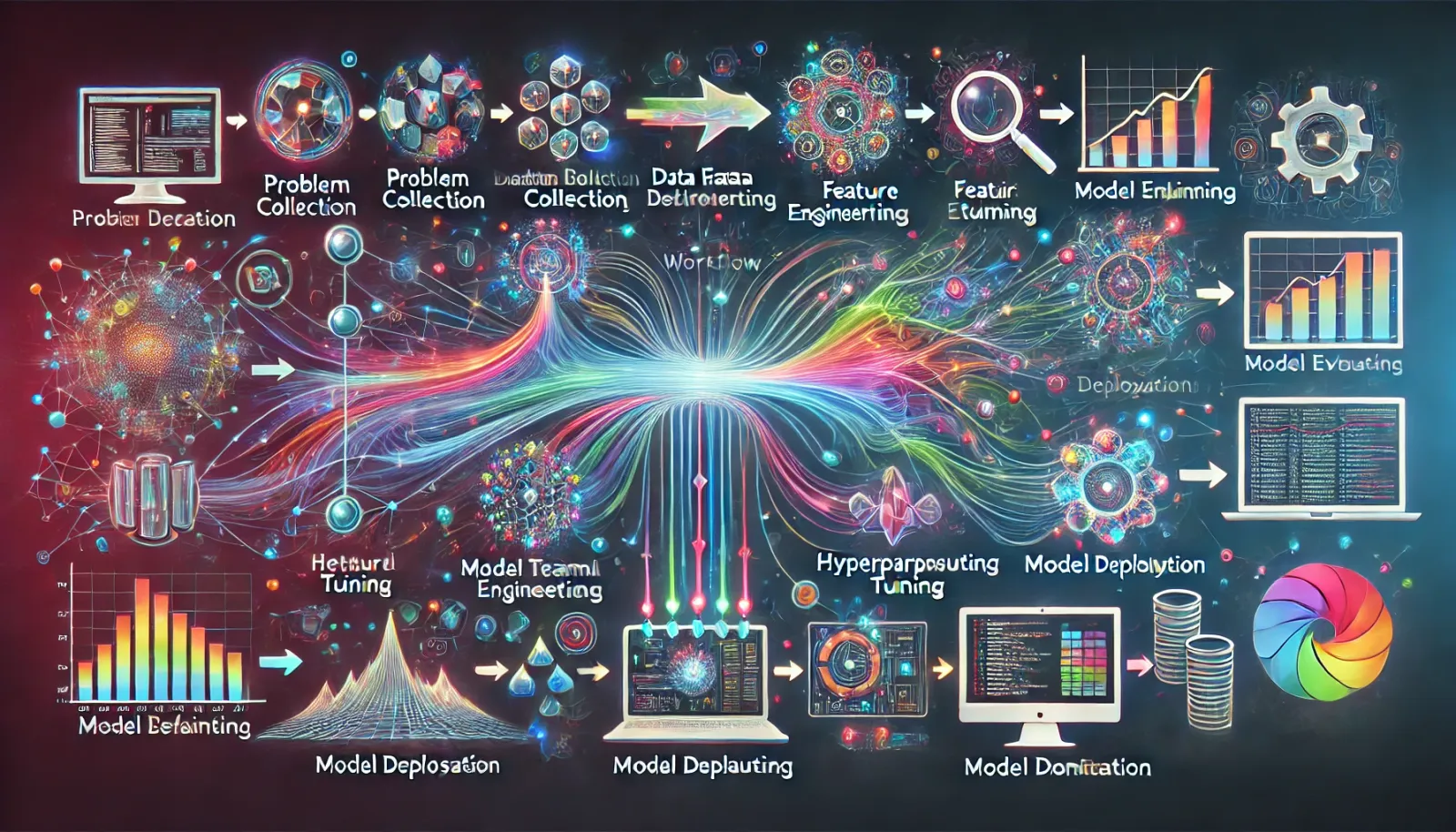

The machine learning workflow is a systematic process that guides the development, training, and deployment of machine learning models. It runs from problem definition and data collection through model evaluation, deployment, and ongoing monitoring, and following it helps ensure that the resulting model is effective and reliable. This section outlines the key steps in a typical machine learning workflow.

1.5.1 Problem Definition

The first step in the machine learning workflow is to clearly define the problem you are trying to solve. This involves understanding the business or research objective and determining how machine learning can be applied to achieve it.

Tasks:

- Define the problem statement.

- Identify the target variable (for supervised learning).

- Determine the success criteria for the model.
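One way to make the outcome of this step concrete is to record it as structured data, so the success criterion can be checked mechanically once the model has been evaluated. The sketch below assumes a hypothetical `ProblemSpec` class; the churn-prediction example, the choice of recall as the metric, and the 0.80 threshold are illustrative assumptions, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProblemSpec:
    """Hypothetical record of the decisions made during problem definition."""
    statement: str            # plain-language problem statement
    task: str                 # e.g. "classification" or "regression"
    target: str               # target variable (supervised learning)
    metric: str               # metric used to judge success
    threshold: float          # minimum (or maximum) acceptable metric value
    higher_is_better: bool = True

    def is_met(self, score: float) -> bool:
        """Return True if a measured score satisfies the success criterion."""
        if self.higher_is_better:
            return score >= self.threshold
        return score <= self.threshold


# Illustrative example: churn prediction judged by recall (assumed values).
churn = ProblemSpec(
    statement="Predict which customers will cancel within 30 days",
    task="classification",
    target="churned",
    metric="recall",
    threshold=0.80,
)
print(churn.is_met(0.83))  # True
print(churn.is_met(0.71))  # False
```

For an error metric such as RMSE, setting `higher_is_better=False` turns the threshold into an upper bound.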

1.5.2 Data Collection

Data is the foundation of any machine learning project. In this step, relevant data is collected from one or more sources for use in training and evaluating the model.

Tasks:

- Identify data sources (databases, APIs, sensors, etc.).

- Gather and store data in a suitable format.

- Ensure data is representative of the problem domain.
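A quick representativeness check is to compare the distribution of the target variable in the collected data with what is expected in the problem domain. The sketch below parses CSV text with Python's standard `csv` module; the inline `RAW` string stands in for an export from a database or API, and its columns and values are invented for illustration.

```python
import csv
import io
from collections import Counter

# Hypothetical stand-in for data exported from a database or API.
RAW = """customer_id,tenure_months,plan,churned
1,3,basic,1
2,24,premium,0
3,12,basic,0
4,1,basic,1
5,36,premium,0
6,8,,0
"""


def load_records(text):
    """Parse CSV text into a list of row dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def class_balance(rows, target):
    """Fraction of rows per target value: a quick representativeness check."""
    counts = Counter(row[target] for row in rows)
    total = sum(counts.values())
    return {label: n / total for label, n in sorted(counts.items())}


rows = load_records(RAW)
print(len(rows))                       # 6
print(class_balance(rows, "churned"))  # about two-thirds '0', one-third '1'
```

A heavily skewed balance, or a category that never appears, suggests that more or different data may be needed before moving on. The empty `plan` field in the last row is the kind of missing value handled during preprocessing.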

1.5.3 Data Preprocessing

Raw data often contains noise, missing values, and inconsistencies. Data preprocessing involves cleaning and transforming the data to make it suitable for model training.

Tasks:

- Handle missing data (e.g., imputation, removal).

- Normalize or scale features.

- Encode categorical variables.

- Remove or treat outliers.

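- Split the data into training, validation, and test sets, ideally before fitting any imputation or scaling, so that those statistics are computed from the training set alone and no information leaks from the held-out data.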
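The sketch below strings several of these tasks together in plain Python: it splits the data first, learns the imputation and scaling statistics from the training split only, and then applies them unchanged to the other splits. The function names and the toy `tenure`/`plan` records are hypothetical; scikit-learn provides tested equivalents such as `SimpleImputer`, `StandardScaler`, and `OneHotEncoder`.

```python
import random
import statistics


def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=0):
    """Shuffle a copy of rows, then cut it into train/validation/test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def fit_imputer_and_scaler(values):
    """Learn the fill value (mean) and scale (std) from TRAINING values only."""
    present = [v for v in values if v is not None]
    mean = statistics.fmean(present)
    std = statistics.pstdev(present) or 1.0  # avoid dividing by zero
    return mean, std


def impute_and_scale(values, mean, std):
    """Replace missing entries with the training mean, then standardize."""
    return [((mean if v is None else v) - mean) / std for v in values]


def one_hot(values, categories):
    """Encode each categorical value as a 0/1 vector over known categories."""
    return [[1 if v == c else 0 for c in categories] for v in values]


# Hypothetical toy records; None marks a missing tenure value.
data = [
    {"tenure": t, "plan": p}
    for t, p in [(3, "basic"), (24, "premium"), (None, "basic"), (1, "basic"),
                 (36, "premium"), (12, "basic"), (8, "premium"),
                 (None, "premium"), (5, "basic"), (20, "basic")]
]
train, val, test = train_val_test_split(data, val_frac=0.2, test_frac=0.2)

mean, std = fit_imputer_and_scaler([r["tenure"] for r in train])
train_tenure = impute_and_scale([r["tenure"] for r in train], mean, std)
test_tenure = impute_and_scale([r["tenure"] for r in test], mean, std)

categories = sorted({r["plan"] for r in train})
train_plan = one_hot([r["plan"] for r in train], categories)
```

A plan category that appeared only outside the training split would be encoded as all zeros, which is one common way of handling unknown categories.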

1.5.4 Feature Engineering

Feature engineering is the process of selecting, modifying, or creating features from the existing data with the aim of improving model performance.

Tasks:

- Select relevant features based on domain knowledge.

- Create new features (e.g., polynomial features, interaction terms).

- Perform dimensionality reduction if necessary (e.g., PCA).
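Polynomial and interaction terms can be generated mechanically from the existing features. The sketch below builds every product of input features up to a chosen degree; `polynomial_features` is a hypothetical helper written for illustration, and scikit-learn's `PolynomialFeatures` transformer implements the same idea, including an `interaction_only` option.

```python
from itertools import combinations, combinations_with_replacement
from math import prod


def polynomial_features(row, degree=2, interaction_only=False):
    """Hypothetical helper: products of input features for degrees 1..degree.

    With interaction_only=True, a feature is never multiplied by itself,
    so only cross terms such as a*b are added.
    """
    pick = combinations if interaction_only else combinations_with_replacement
    features = []
    for d in range(1, degree + 1):
        for idx in pick(range(len(row)), d):
            features.append(prod(row[i] for i in idx))
    return features


print(polynomial_features([2.0, 3.0]))                         # [2.0, 3.0, 4.0, 6.0, 9.0]
print(polynomial_features([2.0, 3.0], interaction_only=True))  # [2.0, 3.0, 6.0]
```

The number of generated features grows quickly with the degree and the number of inputs, which is one reason dimensionality reduction such as PCA may be needed afterwards.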

1.5.5 Model Selection

Choosing the right model is crucial to the success of a machine learning project. This step involves selecting the appropriate algorithm(s) based on the problem type and data characteristics.

Tasks:

- Compare different algorithms (e.g., linear models, decision trees, neural networks).

- Consider model complexity, interpretability, and computational efficiency.

- Use cross-validation to assess potential models.
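k-fold cross-validation splits the training data into k folds, fits each candidate model on k - 1 of them, and scores it on the remaining fold, so every example is used for validation exactly once. The sketch below compares two hypothetical candidates, a constant baseline that always predicts the mean and a one-feature least-squares line, by their average validation mean squared error; the synthetic data is an assumption made for illustration.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each index lands in exactly one val fold."""
    order = list(range(n))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean of y."""
    m = statistics.fmean(ys)
    return lambda x: m


def fit_line(xs, ys):
    """Candidate model: ordinary least squares for y = slope * x + intercept."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def cv_mse(fit, xs, ys, k=5):
    """Average validation mean squared error of `fit` over k folds."""
    fold_scores = []
    for tr, va in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in tr], [ys[i] for i in tr])
        fold_scores.append(statistics.fmean([(model(xs[i]) - ys[i]) ** 2 for i in va]))
    return statistics.fmean(fold_scores)


# Assumed synthetic data: a linear trend plus a small alternating perturbation.
xs = [float(i) for i in range(20)]
ys = [2.0 * x + 1.0 + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]

candidates = {"mean": fit_mean, "line": fit_line}
scores = {name: cv_mse(fit, xs, ys) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(best)  # line
```

The same loop extends to any number of candidates, provided each is wrapped in a function that takes training data and returns a predictor.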

1.5.6 Model Training

Once a model is selected, it is trained on the training dataset. During this process, the model learns to map inputs to outputs by adjusting its parameters to minimize a loss function, which measures how far its predictions are from the true targets.

Tasks:

- Initialize model parameters.

- Train the model using the training dataset.

- Monitor training progress (e.g., loss, accuracy).
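As a concrete instance of loss minimization, the sketch below trains a one-feature linear model `y ≈ w * x + b` by batch gradient descent on the mean squared error and records the loss at every epoch so progress can be monitored. The learning rate, epoch count, initialization range, and synthetic data are illustrative assumptions; real projects usually rely on an optimizer from a library rather than hand-written updates.

```python
import random


def train_linear_gd(xs, ys, lr=0.1, epochs=1000, seed=0):
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error.

    Returns the learned (w, b) and a history holding the loss computed at
    the start of each epoch, before that epoch's parameter update.
    """
    rng = random.Random(seed)
    w, b = rng.uniform(-0.1, 0.1), 0.0  # initialize parameters
    n = len(xs)
    history = []
    for _ in range(epochs):
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        history.append(sum(e * e for e in errors) / n)  # monitor the MSE
        # Gradients of MSE = (1/n) * sum(e^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, history


# Assumed noise-free synthetic data generated from y = 3x + 1 on [0, 2].
xs = [i / 10 for i in range(21)]
ys = [3.0 * x + 1.0 for x in xs]
w, b, history = train_linear_gd(xs, ys)
print(round(w, 3), round(b, 3))  # 3.0 1.0
print(history[0] > history[-1])  # True
```

If the recorded loss rises or oscillates instead of falling, a learning rate that is too large is a common cause.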

1.5.7 Model Evaluation

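After training, the model's performance is evaluated on held-out data to assess its ability to generalize to new, unseen data. The validation set is used to compare models and guide adjustments, while the test set should be reserved for a final, unbiased estimate of performance.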

Tasks:

- Evaluate model performance using metrics relevant to the problem (e.g., accuracy, precision, recall, RMSE).

- Compare performance across different models.

- Identify and address issues like overfitting or underfitting.
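The metrics named above have short definitions that are easy to compute directly. The sketch below implements accuracy, precision, and recall for a binary classifier (by counting true positives, false positives, and false negatives) and RMSE for a regressor; the function names and example labels are illustrative, and `sklearn.metrics` offers equivalents such as `accuracy_score`, `precision_score`, `recall_score`, and `mean_squared_error`.

```python
import math


def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, and false negatives."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    return tp, fp, fn


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Of the examples predicted positive, the fraction that really are."""
    tp, fp, _ = confusion_counts(y_true, y_pred, positive)
    return tp / (tp + fp) if tp + fp else 0.0  # 0.0 when nothing is predicted positive


def recall(y_true, y_pred, positive=1):
    """Of the truly positive examples, the fraction the model found."""
    tp, _, fn = confusion_counts(y_true, y_pred, positive)
    return tp / (tp + fn) if tp + fn else 0.0


def rmse(y_true, y_pred):
    """Root mean squared error for regression outputs."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Illustrative labels for a binary classifier.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
print(accuracy(y_true, y_pred))   # 0.625
print(precision(y_true, y_pred))  # 0.6
print(recall(y_true, y_pred))     # 0.75
```

Here precision is lower than recall because the model produces two false positives but only one false negative; the success criteria from the problem definition should say which kind of error matters more.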

1.5.8 Hyperparameter Tuning

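Hyperparameters are settings that control the behavior of the learning algorithm, such as the learning rate or the maximum depth of a decision tree. Unlike model parameters, they are chosen before training rather than learned from the data, and tuning them can have a large effect on model performance.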

Tasks:

- Use techniques like grid search, random search, or Bayesian optimization to find the best hyperparameters.

- Validate the tuned model on the validation set.

- Use cross-validation to reduce the risk of overfitting the hyperparameters to a single validation split.
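Grid search evaluates every combination of candidate hyperparameter values and keeps the one that scores best on validation data. The sketch below tunes the number of neighbors `k` of a small one-dimensional k-nearest-neighbors classifier against a fixed validation split; the classifier, the `grid_search` helper, and the toy data are hypothetical. Random search would instead sample a fixed number of combinations, and the test set is deliberately kept out of the loop.

```python
from collections import Counter
from itertools import product


def knn_predict(train_x, train_y, x, k):
    """Majority vote among the k training points closest to x (1-D inputs)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return Counter(train_y[i] for i in nearest).most_common(1)[0][0]


def grid_search(param_grid, score_fn):
    """Score every combination in param_grid; return (best_params, best_score, results)."""
    names = sorted(param_grid)
    results = []
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        results.append((params, score_fn(**params)))
    best_params, best_score = max(results, key=lambda r: r[1])
    return best_params, best_score, results


# Hypothetical toy data: label 1 roughly when x > 1.5, one noisy label at x = 0.9.
train_x = [0.1, 0.4, 0.5, 0.9, 1.1, 1.6, 2.0, 2.2, 2.7, 3.0]
train_y = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]
val_x = [0.3, 0.8, 1.2, 1.8, 2.5, 2.9]
val_y = [0, 0, 0, 1, 1, 1]


def val_accuracy(k):
    """Validation accuracy of the k-NN classifier for a given k."""
    preds = [knn_predict(train_x, train_y, x, k) for x in val_x]
    return sum(p == y for p, y in zip(preds, val_y)) / len(val_y)


best_params, best_score, results = grid_search({"k": [1, 3, 5, 7]}, val_accuracy)
print(best_params, best_score)
```

Adding a second entry to `param_grid` (with a score function that accepts it) multiplies the number of combinations, which is why random search or Bayesian optimization becomes attractive as the search space grows.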

1.5.9 Model Deployment

Once a model is trained and validated, it is deployed into production for real-world use. This involves integrating the model into an application where it can make predictions on new data.

Tasks:

- Choose a deployment strategy (e.g., cloud, edge, on-premises).

- Implement model serving infrastructure (APIs, microservices).

- Monitor model performance in production and update as needed.
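A minimal sketch of model serving, assuming a linear model whose trained parameters were saved as JSON: `handle_request` validates a JSON request and returns a prediction, and `PredictHandler` exposes it over HTTP with Python's standard `http.server` module. The weights, the `"features"` request field, and the version string are hypothetical. The Python documentation warns that `http.server` is not recommended for production, so a dedicated web framework or model-serving system would normally take its place.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Serialized output of training (hypothetical weights for a 2-feature linear model).
MODEL_JSON = '{"weights": [0.8, -1.2], "bias": 0.5, "version": "v1"}'


def load_model(text):
    """Rebuild the model parameters from their JSON representation."""
    params = json.loads(text)
    return params["weights"], params["bias"], params["version"]


WEIGHTS, BIAS, VERSION = load_model(MODEL_JSON)


def handle_request(body):
    """Validate a JSON request body and return (HTTP status, JSON response)."""
    try:
        features = [float(x) for x in json.loads(body)["features"]]
        if len(features) != len(WEIGHTS):
            raise ValueError(f"expected {len(WEIGHTS)} features")
    except (KeyError, TypeError, ValueError) as exc:
        return 400, json.dumps({"error": str(exc)})
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 200, json.dumps({"prediction": score, "model_version": VERSION})


class PredictHandler(BaseHTTPRequestHandler):
    """Minimal HTTP wrapper: POST a JSON body, receive a JSON prediction."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_request(self.rfile.read(length).decode("utf-8"))
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.end_headers()
        self.wfile.write(payload.encode("utf-8"))


def serve(port=8000):
    """Serve predictions on the given port until interrupted (not called here)."""
    HTTPServer(("", port), PredictHandler).serve_forever()


print(handle_request('{"features": [1.0, 2.0]}'))
```

Returning the model version with every prediction makes it easier to trace production behavior back to a specific trained model when monitoring.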

1.5.10 Model Monitoring and Maintenance

After deployment, continuous monitoring is necessary to ensure the model remains accurate and relevant. Models may require retraining or updating as new data becomes available.

Tasks:

- Track performance metrics (e.g., accuracy) over time.

- Detect and address data drift or model degradation.

- Retrain and update the model with new data as needed.
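One simple way to detect data drift in a numeric feature is to compare its distribution in production with the distribution seen during training. The sketch below computes the two-sample Kolmogorov-Smirnov (KS) statistic, the largest vertical gap between the two empirical cumulative distribution functions, and raises an alert when it exceeds a threshold. The 0.2 threshold and the example data are arbitrary assumptions; `scipy.stats.ks_2samp` provides the same statistic together with a p-value.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the empirical CDFs of two samples (0 = identical)."""
    a, b = sorted(reference), sorted(current)
    gap = 0.0
    # Both empirical CDFs only change at observed values, so checking those suffices.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        gap = max(gap, abs(cdf_a - cdf_b))
    return gap


def drift_alert(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds an assumed threshold."""
    return ks_statistic(reference, current) > threshold


# Illustrative data for a single numeric feature.
training_values = list(range(100))                # values seen during training
live_same = list(range(100))                      # production data, unchanged
live_shifted = [v + 30 for v in training_values]  # production data, shifted upward

print(ks_statistic(training_values, live_same))    # 0.0
print(drift_alert(training_values, live_same))     # False
print(drift_alert(training_values, live_shifted))  # True
```

In practice such a check might run on a schedule for each important feature, with a sustained alert prompting investigation or retraining.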

Conclusion

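The machine learning workflow is an iterative process. Problem definition, data collection, preprocessing, and feature engineering prepare the data; model selection, training, evaluation, and hyperparameter tuning produce a model; and deployment, monitoring, and maintenance keep that model useful in production. Findings at later stages, such as weak evaluation results or drift detected in production, often send the work back to earlier steps. Each step plays an important role in ensuring that the model is effective, reliable, and capable of delivering meaningful results in real-world applications.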