1.2 Historical Context and Evolution

Introduction

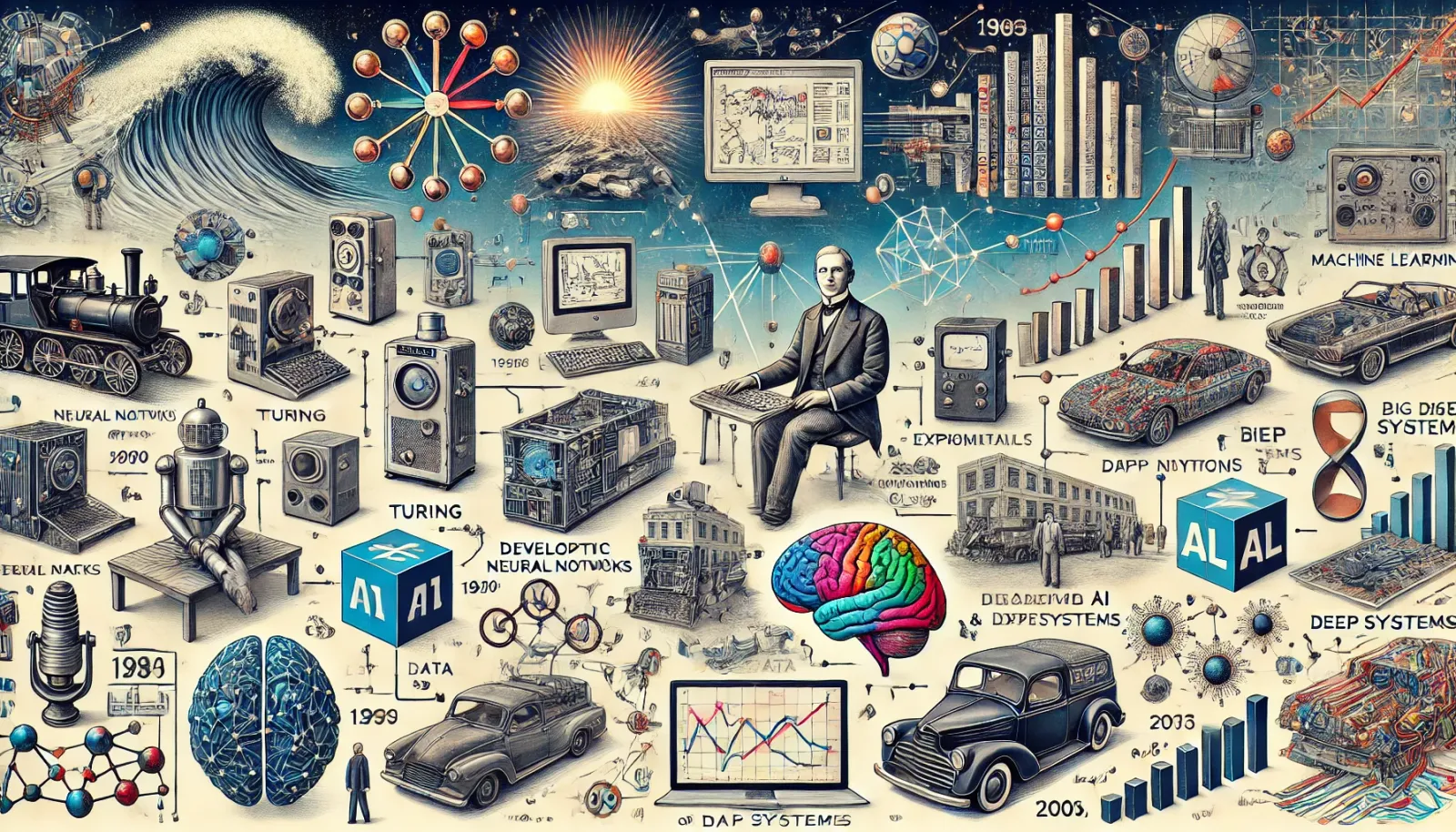

Machine Learning (ML) has developed over several decades through a series of milestones that shaped it into the technology it is today. Tracing this history clarifies both the theoretical foundations of the field and the technological advances that made modern machine learning practical.

Early Foundations (1940s - 1950s)

The origins of machine learning can be traced back to the early days of computing and artificial intelligence (AI). Some key milestones include:

- Hebbian Learning: In 1949, psychologist Donald Hebb proposed a theory of learning in biological systems in his book "The Organization of Behavior." It is often summarized as "cells that fire together, wire together": a connection between two neurons strengthens when they are repeatedly active at the same time. This idea influenced early neural network models.

- Alan Turing's Contributions: In 1950, British mathematician Alan Turing discussed the idea of a "learning machine" in his seminal paper "Computing Machinery and Intelligence," suggesting that a machine might be educated, much like a child, rather than fully programmed in advance. This idea anticipated the development of algorithms capable of learning from data.
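In later neural network models, Hebb's principle is often written as a simple weight-update rule. The form below is a common modern formalization, not notation taken from Hebb's book; the symbols are introduced here for illustration: $w_{ij}$ is the connection weight from neuron $i$ to neuron $j$, $x_i$ and $y_j$ are the activities of the two neurons, and $\eta$ is a small positive learning rate.

$$\Delta w_{ij} = \eta \, x_i \, y_j$$

The weight grows only when both neurons are active together, which is the "fire together, wire together" intuition expressed as arithmetic. For example, with $\eta = 0.1$, $x_i = 1$, and $y_j = 1$, the weight increases by $0.1$; if either neuron is inactive ($x_i = 0$ or $y_j = 0$), the weight is unchanged.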

The Advent of Neural Networks (1950s - 1980s)

The development of neural networks was a significant step in the evolution of machine learning:

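- Perceptron (1957): Frank Rosenblatt developed the perceptron, an early neural network model designed for pattern recognition. A single-layer perceptron can only separate classes that are linearly separable (it cannot learn the XOR function, for example), but it established the idea of adjusting connection weights in response to errors and became a foundational model in ML.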

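- Backpropagation Algorithm (1986): David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm, which computes how much each weight in a network contributes to the output error and thereby made training multi-layer neural networks practical. Earlier formulations existed, notably in Paul Werbos's 1974 doctoral work, but the 1986 paper significantly advanced the field.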
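The perceptron's learning rule is simple enough to sketch in a few lines. The following is a minimal, illustrative Python version of a Rosenblatt-style update rule, not a reproduction of Rosenblatt's original implementation; the function names, the learning rate, the epoch limit, and the toy AND/XOR datasets are assumptions chosen for illustration. It learns logical AND, which is linearly separable, while no choice of weights lets it classify XOR correctly.

```python
# Minimal perceptron sketch (Rosenblatt-style error-correction rule).
# Assumptions (illustrative only): binary labels in {0, 1}, a step
# activation, learning rate 1.0, and toy AND/XOR datasets.


def predict(weights, bias, x):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, epochs=10, lr=1.0):
    """Update weights and bias only when a sample is misclassified."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, bias


X = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Logical AND is linearly separable, so training converges.
y_and = [0, 0, 0, 1]
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # prints [0, 0, 0, 1]

# XOR is not linearly separable: training runs until the epoch limit,
# and at least one of the four points remains misclassified.
y_xor = [0, 1, 1, 0]
w_xor, b_xor = train_perceptron(X, y_xor)
print([predict(w_xor, b_xor, x) for x in X])
```

The XOR failure is the limitation that multi-layer networks, trained with backpropagation, later overcame.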

Symbolic AI and Expert Systems (1960s - 1980s)

During this period, much of AI research focused on symbolic reasoning and expert systems:

- Expert Systems: These systems were designed to mimic human expertise in specific domains by encoding a specialist's knowledge as hand-written if-then rules. While successful in certain applications, they could not learn from data, so every new situation required engineers to add or revise rules manually, which limited their adaptability.

Emergence of Machine Learning (1980s - 1990s)

The 1980s and 1990s saw a shift towards data-driven approaches, leading to the formalization of machine learning as a distinct field:

- Machine Learning Algorithms: New algorithms such as decision trees (for example, CART and ID3 in the mid-1980s) and support vector machines (SVM, in the 1990s) were developed, and older methods such as k-nearest neighbors (k-NN) became widely used, providing practical tools for data classification and prediction.

- Probabilistic Models: Bayesian networks and other probabilistic models gained prominence, offering a principled way to represent uncertainty and the dependencies between variables.

Rise of Big Data and Deep Learning (2000s - 2010s)

The 21st century has seen rapid advancements in machine learning, driven by the availability of large datasets and increased computational power:

- Big Data: The proliferation of data from the internet, social media, and sensors enabled the development of more sophisticated models that could learn from vast amounts of information.

- Deep Learning: The resurgence of neural networks, particularly deep learning techniques, revolutionized fields such as computer vision, natural language processing, and speech recognition. AlexNet (2012), which won the ImageNet Large Scale Visual Recognition Challenge by a wide margin, demonstrated the potential of deep learning; within a few years, deep networks approached or matched human-level accuracy on certain benchmark tasks.

Modern Era and Future Directions (2010s - Present)

Machine learning continues to evolve, with ongoing research focusing on improving model interpretability, fairness, and efficiency:

- AI and ML Integration: Machine learning has become the dominant approach within AI, driving advances in areas such as reinforcement learning, generative models, and autonomous systems.

- Ethical and Societal Implications: As ML systems become more pervasive, there is growing attention to the ethical and societal implications of their use, including bias, privacy, and the impact on employment.